Shifting UI tests to the far left (part 1)

Can UI tests be used as specifications?

Writing specs correctly is hard, with insufficient or hidden specs and communication flaws between people being the two most common problems [1]. Moreover, people who have led mobile teams know from experience that it is an even harder problem in the native mobile world due to its dual nature, where engineers on both iOS and Android must understand and implement exactly the same thing.

Can we remove some of this complexity by writing specs as UI tests and shifting them to the far left, into the specification phase, before development even starts? When can UI tests, or any tests in general, be considered specifications, and how can they reveal behaviours and specs that do not manifest in the UI itself and so remain hidden when they shouldn’t? Let’s try to find out.

Writing complete and correct specs is a hard thing to do. Why is that?

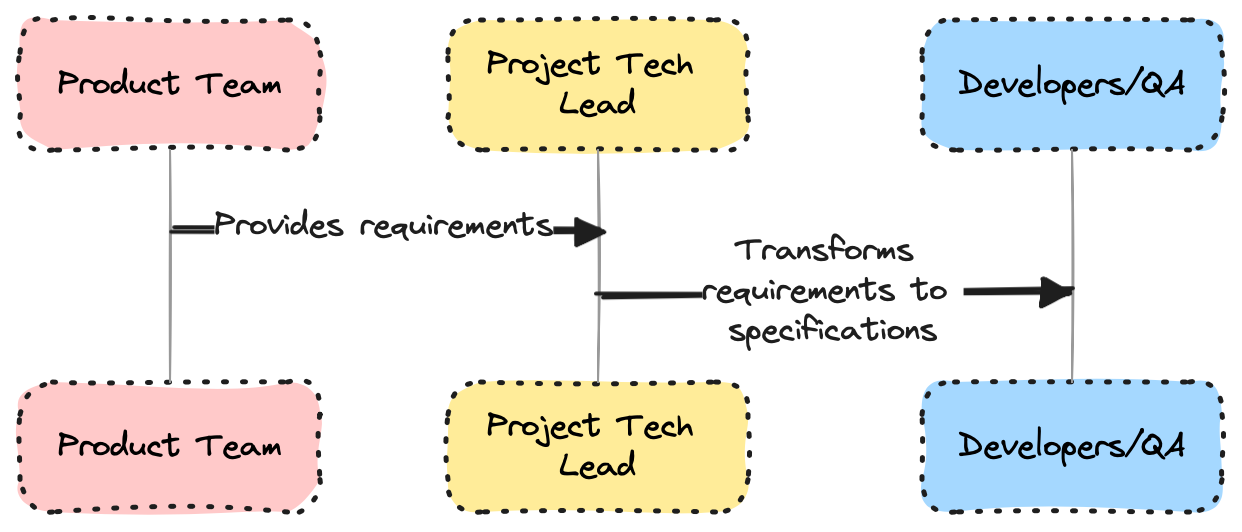

The lifecycle of a feature starts with the requirements. Written in natural language by the product team, they describe the required behaviour and usually live in a Product Requirements Document (PRD) [2].

The project tech lead picks up the requirements and transforms them into specifications, which are a more technical response to the requirements document. They let the developers know what to build and the testers know what to test.

This process often fails, leading to time wasted going back and forth and to frustration piling up.

People communication flaws

Any process that involves communication between people over multiple hops carries an inherent complexity, because everyone has to understand exactly the same thing. I have seen this process fail too many times. To make things worse, in mobile teams the last hop consists of two teams (iOS and Android), an extra complexity that often shows up as the QA team reporting implementation inconsistencies between the two platforms [3].

Insufficient/hidden specs

Specs are easy to miss. Some of them are discovered or decided only during the implementation phase. For instance, in mobile apps hidden states (loading and error states) are everywhere and are often missing from the designs. The designs also say nothing about what happens in the background in terms of API interaction with the backend, leaving room for implementation inconsistencies between Android and iOS to creep in.

In the mobile world, UI tests are widespread. If we can demonstrate that they are expressive enough [4] to capture the various aspects of a mobile app’s behavior, then we could use them to write complete specifications before the implementation even starts, shifting them left into the specification phase.

Acceptance criteria and regression tests at the same time

Using UI tests to write specifications has the paramount benefit of them also acting as regression tests, ensuring that the implementation stays aligned with the specifications and leaving little room for divergence.

Under which conditions can UI tests be used as specifications? The 3 conditions

To qualify as specifications, UI tests (and all tests in general) must meet three criteria [5]:

1. They should not change after a refactor.

2. They must be written in a clear, easily understandable format.

3. They should be expressive enough to uncover any hidden requirements.

Looking at a specific scenario

To explore further whether UI tests can meet these conditions and thus be used as specifications, we will use a simple example taken from our application. We had to migrate to a new backend, which required the login flow to authenticate against both the old and the new backend during the transition period.

In this scenario, the functional requirement can be expressed in natural language as follows:

When logging in, we should be authenticated at the same time to the old and the new API, allowing us to access endpoints in both systems.

It could then be transformed into a specification as the following BDD scenario:

Scenario: User logs in

Given that an existing user launches the app

When he fills in his credentials in the login page and taps on the login button

Then he should log in to both systems and be presented with the home page

How can a UI test meet the 3 conditions and act as a specification?

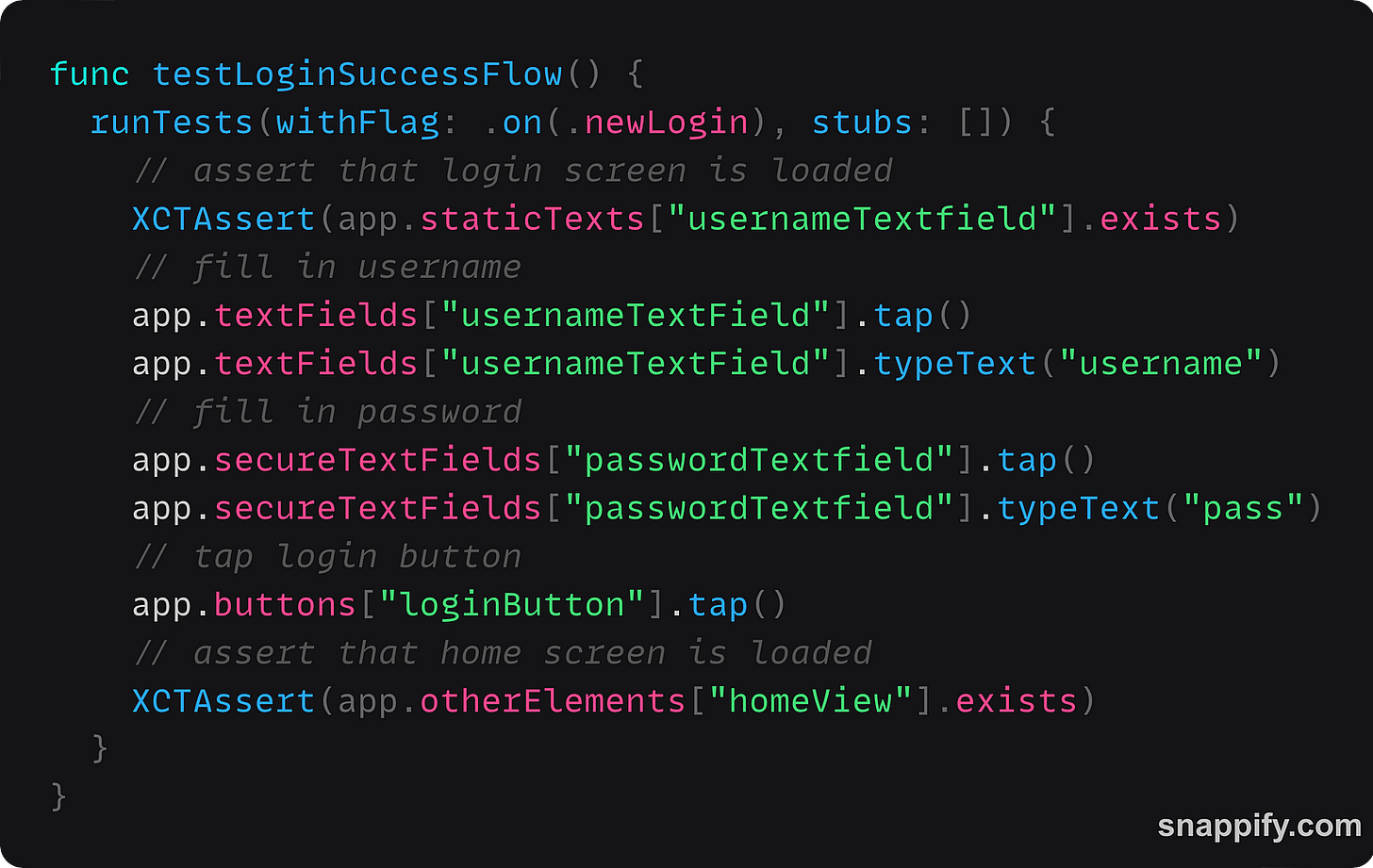

The above specification can surely be re-written as a UI test in the following way.
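As a rough illustration, such a test might look like the Python sketch below. Everything in it is an assumption made for the example: `StubNetwork`, `FakeApp`, the element identifiers, the `/old-api/login` and `/new-api/login` paths and the `new_backend_login` feature flag are hypothetical stand-ins, and a real suite would drive the actual app through a framework such as XCUITest or Espresso.

```python
class StubNetwork:
    """Serves canned responses instead of hitting the real network."""

    def __init__(self):
        self.responses = {}

    def stub(self, method, url, status, body=None):
        self.responses[(method, url)] = (status, body or {})

    def request(self, method, url):
        # An unstubbed call raises KeyError, so nothing reaches a real server.
        return self.responses[(method, url)]


class FakeApp:
    """Tiny model of the app under test (hypothetical, for illustration only)."""

    def __init__(self, network, flags):
        self.network = network
        self.flags = flags
        self.screen = "login"
        self.fields = {}

    def type_text(self, field_id, text):
        self.fields[field_id] = text

    def tap(self, element_id):
        if element_id != "login_button":
            return
        urls = ["/old-api/login"]
        if self.flags.get("new_backend_login"):
            urls.append("/new-api/login")
        statuses = [self.network.request("POST", url)[0] for url in urls]
        if all(status == 200 for status in statuses):
            self.screen = "home"

    def is_displayed(self, screen):
        return self.screen == screen


def test_user_logs_in():
    # Control the network and the feature flag before launching the app.
    network = StubNetwork()
    network.stub("POST", "/old-api/login", 200, {"token": "old"})
    network.stub("POST", "/new-api/login", 200, {"token": "new"})
    app = FakeApp(network, flags={"new_backend_login": True})

    assert app.is_displayed("login")
    app.type_text("email_field", "user@example.com")
    app.type_text("password_field", "secret")
    app.tap("login_button")
    assert app.is_displayed("home")
    return app


test_user_logs_in()
```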

It is important to note that we should always control the network using stubs (avoiding the flakiness of the real network is a must-have for any robust UI test suite), as well as any feature flags that might affect our scenarios.

Let’s see how each of the 3 conditions can actually be met:

✅ 1. They should not change after a refactor

A UI test written this way changes if and only if the requirements change. After all, it is nothing more than a translation of the BDD scenario, so it does not depend on the underlying implementation and does not change when that implementation is refactored. So we can check condition #1 from our list.

✅ 2. They must be written in a clear, easily understandable format

Nevertheless, compared with the BDD scenario, a UI test written as a sequence of low-level UI interactions is far more difficult to understand and reason about. A specification should be easily understood by everybody, including non-developers.

Using the Page Object pattern (or the closely related Robot pattern) we can refactor our UI test into a more declarative, human-readable format.
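A minimal Python sketch of that refactoring could look as follows. The page objects (`LoginPage`, `HomePage`), the `launch_app_as_existing_user` helper and the tiny `FakeApp` model are hypothetical names invented for this example; the point is only that the test body ends up reading like the Given/When/Then scenario.

```python
class FakeApp:
    """Minimal hypothetical model of the app, just enough to run the example."""

    def __init__(self):
        self.screen = "login"
        self.fields = {}

    def type_text(self, field_id, text):
        self.fields[field_id] = text

    def tap(self, element_id):
        has_credentials = self.fields.get("email_field") and self.fields.get("password_field")
        if element_id == "login_button" and has_credentials:
            self.screen = "home"


class HomePage:
    def __init__(self, app):
        self.app = app

    def is_displayed(self):
        return self.app.screen == "home"


class LoginPage:
    """Hides element identifiers and low-level gestures behind intent-revealing methods."""

    def __init__(self, app):
        self.app = app

    def fill_credentials(self, email, password):
        self.app.type_text("email_field", email)
        self.app.type_text("password_field", password)
        return self

    def tap_login(self):
        self.app.tap("login_button")
        return HomePage(self.app)


def launch_app_as_existing_user():
    return LoginPage(FakeApp())


def test_user_logs_in():
    # Given that an existing user launches the app
    login_page = launch_app_as_existing_user()
    # When he fills in his credentials and taps on the login button
    home_page = login_page.fill_credentials("user@example.com", "secret").tap_login()
    # Then he should be presented with the home page
    assert home_page.is_displayed()
    return home_page


test_user_logs_in()
```

If an element identifier changes, only the page object needs updating; the test, and therefore the specification, stays the same.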

The UI test now achieves a level of clarity comparable to the BDD scenario, making it easily comprehensible. After all, as a specification it must be effectively communicated to others and be understandable when looking back at it sometime in the future. Thus we can check condition #2 as well.

✅ 3. They should be expressive enough to uncover any hidden requirements

It turns out that, when looked at from a different perspective, UI tests really shine at expressing specifications that are missed or remain hidden in mobile apps.

Here are some examples of easy to miss requirements and how UI tests can help in revealing them:

Missed loading states

One thing that is easily missed in the mobile world is defining exactly what the loading state looks like. With UI tests there is no more guessing or asking around about it: we can explicitly define the loading state when writing the spec inside the UI test, and the same test then enforces it once the feature is implemented!
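One way to pin the loading state down, sketched here in Python, is to have the test hold back the stubbed response and assert on the UI while the call is still in flight. The concrete loading design (a spinner plus a disabled login button), the element identifiers and the `FakeApp` model are assumptions made for this example.

```python
class FakeApp:
    """Hypothetical app model whose login response is released by the test."""

    def __init__(self):
        self.screen = "login"
        self.visible = {"login_button"}
        self.enabled = {"login_button"}
        self._pending = None

    def tap(self, element_id):
        if element_id == "login_button" and element_id in self.enabled:
            # The call is now in flight: show the spinner, block repeated taps.
            self.visible.add("loading_spinner")
            self.enabled.discard("login_button")
            self._pending = "login"

    def deliver_stubbed_response(self, status):
        # The test decides when the held-back stub response arrives.
        self.visible.discard("loading_spinner")
        self.enabled.add("login_button")
        if self._pending == "login" and status == 200:
            self.screen = "home"
        self._pending = None


def test_login_shows_loading_state():
    app = FakeApp()
    app.tap("login_button")

    # Spec: while the login call is in flight, a spinner is shown
    # and the login button is disabled.
    assert "loading_spinner" in app.visible
    assert "login_button" not in app.enabled
    assert app.screen == "login"

    app.deliver_stubbed_response(200)

    # Spec: once the response arrives, the spinner disappears and home is shown.
    assert "loading_spinner" not in app.visible
    assert app.screen == "home"
    return app


test_login_shows_loading_state()
```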

Missed error states

The same applies to error states, or to different flows that are triggered by specific API error codes. You can simply control the network response using stubs and create additional UI tests that define those specs as well, as in the non-happy-path scenario where the login call returns the noLongerSupported API error and the user is asked to force-update the app.
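A Python sketch of that force-update scenario might look like this. Only the `noLongerSupported` error name comes from our app; the `/login` path, the HTTP status, the response shape, the screen names and the helper classes are hypothetical.

```python
class StubNetwork:
    """Returns whatever response the test stubbed for a URL."""

    def __init__(self):
        self.responses = {}

    def stub(self, url, status, body):
        self.responses[url] = (status, body)

    def post(self, url):
        return self.responses[url]


class FakeApp:
    """Hypothetical app model that maps login responses to screens."""

    def __init__(self, network):
        self.network = network
        self.screen = "login"

    def tap(self, element_id):
        if element_id != "login_button":
            return
        status, body = self.network.post("/login")
        if status == 200:
            self.screen = "home"
        elif body.get("error") == "noLongerSupported":
            self.screen = "force_update"
        else:
            self.screen = "login_error"


def test_login_with_unsupported_version_forces_update():
    network = StubNetwork()
    # The status code and body shape are assumptions made for this sketch.
    network.stub("/login", 400, {"error": "noLongerSupported"})
    app = FakeApp(network)

    app.tap("login_button")

    # Spec: the user is asked to update the app instead of seeing a generic error.
    assert app.screen == "force_update"
    return app


test_login_with_unsupported_version_forces_update()
```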

Non-happy scenarios are notoriously easy to miss during implementation and extremely time-consuming to test. Having a UI test as a specification (along with stubbed network responses) is, in my experience, the most reliable way to ensure that this non-happy behaviour gets implemented and does not regress in the future. I call that peace of mind for the whole team 🧘

It turns out that there are specifications that will never show up in the UI itself. They remain hidden in what happens in the background. Here are some examples.

Hidden interaction with the API

How our app interacts with the backend, which API calls are made and in what order is definitely a specification that needs to be agreed upon before implementation. And one that can never be exposed as part of a related BDD scenario.

Revealing this hidden spec is actually quite easy. We can record all the HTTP calls made during the test execution and use an inline snapshot that flushes and asserts all the calls made up to that point in time.

In our backend migration example, the snapshot would need to assert that the app authenticates against both the old and the new backend.
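The idea can be sketched in Python as follows. `RecordingNetwork`, `assert_http_calls` and all the endpoint paths are hypothetical; the sketch only shows the mechanism of recording every call and comparing the calls made since the last flush against a snapshot written inline in the test.

```python
class RecordingNetwork:
    """Records every HTTP call the app makes (hypothetical test double)."""

    def __init__(self):
        self._calls = []

    def request(self, method, url):
        self._calls.append(f"{method} {url}")
        return 200

    def flush(self):
        # Hand back the calls recorded so far and start a fresh recording.
        calls, self._calls = self._calls, []
        return calls


def assert_http_calls(network, expected_snapshot):
    """Asserts that the calls made since the last flush match the inline snapshot."""
    recorded = network.flush()
    expected = [line.strip() for line in expected_snapshot.strip().splitlines()]
    assert recorded == expected, f"HTTP calls differ from snapshot: {recorded}"


class FakeApp:
    """Hypothetical app model; the endpoint paths are invented for this sketch."""

    def __init__(self, network):
        self.network = network
        self.screen = "login"

    def launch(self):
        self.network.request("GET", "/new-api/config")

    def tap(self, element_id):
        if element_id == "login_button":
            self.network.request("POST", "/old-api/login")
            self.network.request("POST", "/new-api/login")
            self.network.request("GET", "/new-api/home")
            self.screen = "home"


def test_user_logs_in():
    network = RecordingNetwork()
    app = FakeApp(network)

    app.launch()
    assert_http_calls(network, "GET /new-api/config")

    app.tap("login_button")
    assert app.screen == "home"
    # Spec: login authenticates against both backends, in this order,
    # and makes no other calls.
    assert_http_calls(network, """
        POST /old-api/login
        POST /new-api/login
        GET /new-api/home
    """)
    return network


test_user_logs_in()
```

Because the comparison is exact, a missing, extra, duplicated or reordered call fails the test.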

Thus, when writing a UI test as a specification, one can also specify exactly which endpoints are called, making it clear to the developer what needs to be done in terms of API interaction to support this flow.

I think the above method is actually quite powerful for the following reasons:

It enforces, at implementation time, how the app interacts with the backend, leaving no room for ambiguity or miscommunication (or for divergence between the Android and iOS implementations).

It asserts that nothing changes in the future. It catches unintended deletions or additions of API calls and stops duplicate calls from creeping into our flows, something that can remain hidden for a long time as long as nothing critical breaks.

It serves as accurate, always up-to-date documentation of the interaction with the server. If at any point somebody asks you which API calls are involved in a specific flow, especially a complex one like the login flow in our app, you do not have to guess, ask someone with context, try to remember, follow the code to find the answer, or even open a network proxy and see what is being recorded. And do it twice, for Android and iOS… With this technique, you will quickly know the correct answer every time, with 100% certainty. And everybody in the team will know too 😎

Hidden analytics events / audit logs

Using the same method, you could specify and reveal any other business critical operations that happen in the background, like analytics and audit logs. Providing regression detection for those at the same time! P.S. This argument is so strong it never gets old 😈

Used in the ways presented above, UI tests have an expressive power that goes beyond the mere specification of user flows, revealing specifications that otherwise remain hidden and are difficult to specify. We can finally check condition #3, the last condition on our list, establishing that UI tests can be used to write specifications for mobile apps.

There are other ways to extend UI tests to reveal easy-to-miss or hidden specifications, but it should be clear by now that they add a lot of value here and can thus become an efficient solution to the problem of missing specifications, one of the two most common problems when writing specifications.

In part 2 of the article we will explore how one can quickly write UI tests before any coding starts and how this methodology addresses communication flaws, the other important problem when writing specifications.

[2] This tweet from @GergelyOrosz introduced me to PRDs and why they are needed.

[3] I have often found myself triaging such issues and wondering whether I should just ignore the lack of consistency between Android and iOS and accept that this might be an unsolvable problem.

[4] Various methods are available for writing specifications, including natural language, input-output examples, behavior-driven development (BDD) scenarios, decision tables, state diagrams, flow charts and Harel statecharts. Each method is bounded by its expressive power, with the more advanced ones allowing more accurate modelling of the system’s behaviour and leaving less room for overlooked specifications. Although these are great tools with great capabilities, none of them acts as a regression test at the same time. They remain disconnected from the actual implementation.

[5] I have to admit that I arrived at these 3 conditions through hands-on experience. My impression is that treating tests as specifications is a subject with lots of hidden potential that has remained under the radar of the tech community. Maybe there is literature on this, maybe there isn’t, but I would argue that these 3 conditions are at least a minimum subset of any set of conditions someone might come up with.